Brain Observatory: What are those cells doing?#

This sandbox will help you analyze a dataset from the Allen Institute’s Brain Observatory.#

These datasets contain calcium imaging data for various different cell types in the visual cortex of the mouse. It’s likely that these cell types have different roles in the visual system – your mission is to figure out what these roles are. You will choose a visual area, a cell type, and look at their responses to natural stimuli.

By the end of this lesson, you will be able to:#

Examine a 2-photon imaging dataset for a particular cell type, in a specific visual area.

Use imshow to visualize two-dimensional images in Python.

Create plots of raw calcium imaging data.

Additional information on this dataset, and how it was collected, can be found here as well as in the SDK documentation.

Step 1. Importing toolboxes#

First, we’ll import the necessary toolboxes to run this code. The first chunk of “import” lines will bring in some standard toolboxes that we need. For example, “numpy” is a toolbox that has functions to work with large arrays. The second chunk of import lines brings in some toolboxes that the Allen Brain Observatory has already packaged, to help users analyze its data.

numpy, pandas, and matplotlib.pyplot as their conventional names.# Install allensdk if it isn't already there

try:

import allensdk

print("allensdk is already installed.")

except ImportError:

print("allensdk not found, installing now...")

!pip install allensdk

allensdk is already installed.

# Allen specific toolboxes

import allensdk.brain_observatory.stimulus_info as stim_info

from allensdk.core.brain_observatory_cache import BrainObservatoryCache

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

/opt/miniconda3/envs/jb_py311/lib/python3.11/site-packages/allensdk/core/brain_observatory_nwb_data_set.py:43: UserWarning: pkg_resources is deprecated as an API. See https://setuptools.pypa.io/en/latest/pkg_resources.html. The pkg_resources package is slated for removal as early as 2025-11-30. Refrain from using this package or pin to Setuptools<81.

from pkg_resources import parse_version

Similar to how we created an instance of the CellTypesCache, here we’ll create an instance of the “BrainObservatoryCache.” The datahub already has a manifest file available in the directory you see below. This directory also has all of the data we need.

Note: You need to run this notebook in the Docker container that includes allen-brain-observatory (often denoted by ‘w/ allen’). Otherwise you will get a permissions error when you attempt to run the cell below.

# We will create an instance of the Brain Observatory Cache as an object, "boc."

boc = BrainObservatoryCache(manifest_file='manifest.json')

Step 2. Get a list of all possible transgenic mouse lines and brain areas, and choose which to work with.#

Next, we’ll ask that “boc” structure to tell us what all of the possible Cre lines and brain areas are that we can analyze. You’ll need to use these exact names when you’re trying to pull a specific one from the dataset.

# We'll save the list of cre lines as a variable, 'cre-lines'.

cre_lines = boc.get_all_cre_lines()

print("all cre lines: " + str(cre_lines))

# We'll save the list of possible structures as a variable, 'brain_areas'.

brain_areas = boc.get_all_targeted_structures()

print("all brain regions: " + str(brain_areas))

all cre lines: ['Cux2-CreERT2', 'Emx1-IRES-Cre', 'Fezf2-CreER', 'Nr5a1-Cre', 'Ntsr1-Cre_GN220', 'Pvalb-IRES-Cre', 'Rbp4-Cre_KL100', 'Rorb-IRES2-Cre', 'Scnn1a-Tg3-Cre', 'Slc17a7-IRES2-Cre', 'Sst-IRES-Cre', 'Tlx3-Cre_PL56', 'Vip-IRES-Cre']

all brain regions: ['VISal', 'VISam', 'VISl', 'VISp', 'VISpm', 'VISrl']

Task: Choose a visual area and Cre line from the lists above to examine in the rest of the notebook. Refer back to our lecture slides or the Brain Observatory landing page to learn more about these different visual areas. Primary cortex (VISp) is surrounded by several other brain regions, which have unknown functions.

You can find more info about the Cre-lines here here.

Create a new block of Markdown text below this one which briefly describes your visual area and Cre line. Remember that markdown is the other type of block that Jupyter supports. You can change your block to Markdown using the dropdown in the menu above. Check out this cheatsheet for a few handy elements of Markdown syntax.

Assign your visual area and cre line to

visual_areaandcre_line, respectively.

visual_area = 'VISp'

cre_line = 'Rorb-IRES2-Cre'

Step 3. Extract an experiment session.#

Use the

get_experiment_containers()method of ourbocobject to get a list of experiments that are available. This method takes the argumentstargeted_structures=as well ascre_lines=, which both require a list. Assign the outcome of this method toexperiments.Create a dataframe out of

expsand assign it toexps_df.There isn’t an experiment for every combination of Cre lines and visual areas above. Add an

ifstatement that will print a statement for you if the dataframe you created is empty (Hint: useemptyattribute).Show the head of your dataframe.

exps = boc.get_experiment_containers(targeted_structures=[visual_area],cre_lines =[cre_line])

exps_df = pd.DataFrame(exps)

exps_df.head()

| id | imaging_depth | targeted_structure | cre_line | reporter_line | donor_name | specimen_name | tags | failed | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | 511510675 | 275 | VISp | Rorb-IRES2-Cre | Ai93(TITL-GCaMP6f) | 228786 | Rorb-IRES2-Cre;Camk2a-tTA;Ai93-228786 | [] | False |

| 1 | 586351979 | 275 | VISp | Rorb-IRES2-Cre | Ai93(TITL-GCaMP6f) | 304756 | Rorb-IRES2-Cre;Camk2a-tTA;Ai93-304756 | [] | False |

| 2 | 590168381 | 275 | VISp | Rorb-IRES2-Cre | Ai93(TITL-GCaMP6f) | 305467 | Rorb-IRES2-Cre;Camk2a-tTA;Ai93-305467 | [] | False |

| 3 | 511510989 | 275 | VISp | Rorb-IRES2-Cre | Ai93(TITL-GCaMP6f) | 222431 | Rorb-IRES2-Cre;Camk2a-tTA;Ai93-222431 | [] | False |

| 4 | 512124562 | 275 | VISp | Rorb-IRES2-Cre | Ai93(TITL-GCaMP6f) | 234831 | Rorb-IRES2-Cre;Camk2a-tTA;Ai93-234831 | [] | False |

Once you’ve successfully found a combination that works, you should have the head of your dataframe above. Let’s look into one of these experiment containers, most of which have three different sessions for different types of visual stimuli.

Pick an experiment from the table above. Copy the id in the “id” column and assign it to

experiment_container_id.Use the

get_ophys_experiments()method on ourbocobject, providing it with the argumentsexperiment_container_ids=andstimuli=[‘natural_scenes’]. This will restrict our search to experiments with our ID and where natural stimuli were shown. Assign this toexpt_cont.Look at the

expt_contobject. What kind of object is this?

experiment_container_id = 512124562

expt_cont = boc.get_ophys_experiments(experiment_container_ids=[experiment_container_id],stimuli=['natural_scenes'])

type(expt_cont)

expt_cont

[{'id': 512124564,

'imaging_depth': 275,

'targeted_structure': 'VISp',

'cre_line': 'Rorb-IRES2-Cre',

'reporter_line': 'Ai93(TITL-GCaMP6f)',

'acquisition_age_days': 80,

'experiment_container_id': 512124562,

'session_type': 'three_session_B',

'donor_name': '234831',

'specimen_name': 'Rorb-IRES2-Cre;Camk2a-tTA;Ai93-234831',

'fail_eye_tracking': False}]

Now, let’s get the id for this experiment and extract the data using the get_ophys_experiment_data method.

Programmatically, assign the ‘id’ of your

expt_contobject tosession_id.Use the

get_ophys_experiment_data()method, simply giving it your session_id as an argument, and assign the output of this todata.What kind of object is data?

session_id = expt_cont[0]['id']

data = boc.get_ophys_experiment_data(session_id)

data

<allensdk.core.brain_observatory_nwb_data_set.BrainObservatoryNwbDataSet at 0x1055fca10>

Step 4. Download & inspect the natural scenes imaging session#

First, we’ll look at the session where the mouse viewed natural scenes.

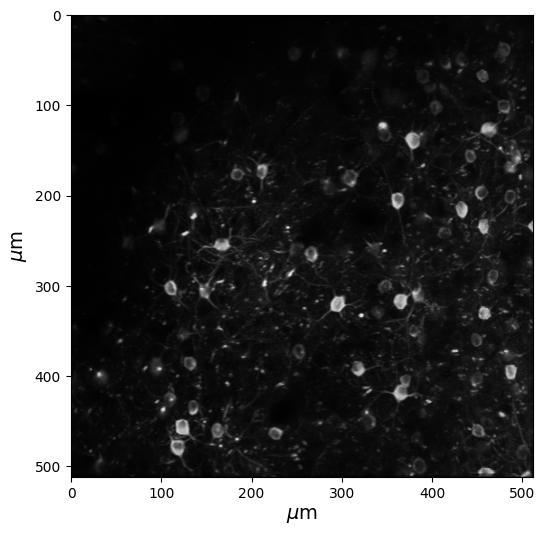

Let’s take a quick look at the data you just acquired. We’ll take a maximum projection of the data, so that we can actually see the cells. If we just looked at one snapshot of the raw imaging data, the cells would look dim. A “maximum projection image” shows us the maximum brightness for each pixel, across the entire experiment.

Below, we are using the get_max_projection() method on our data, and then using the imshow() method in order to see our projection.

Note: The weird text for the ylabel is called “TeX” markup, in order to get the greek symbol mu (\(\mu\)). See documentation here.

# Get the maximum projection (a numpy array) of our data

max_projection = data.get_max_projection()

# Create a new figure

fig = plt.figure(figsize=(6,6))

# Use imshow to visualize our numpy array

plt.imshow(max_projection, cmap='gray')

# Add labels for microns; weird syntax below is to get the micro sign

plt.ylabel(r'$\mu$m',fontsize=14)

plt.xlabel(r'$\mu$m',fontsize=14)

plt.show()

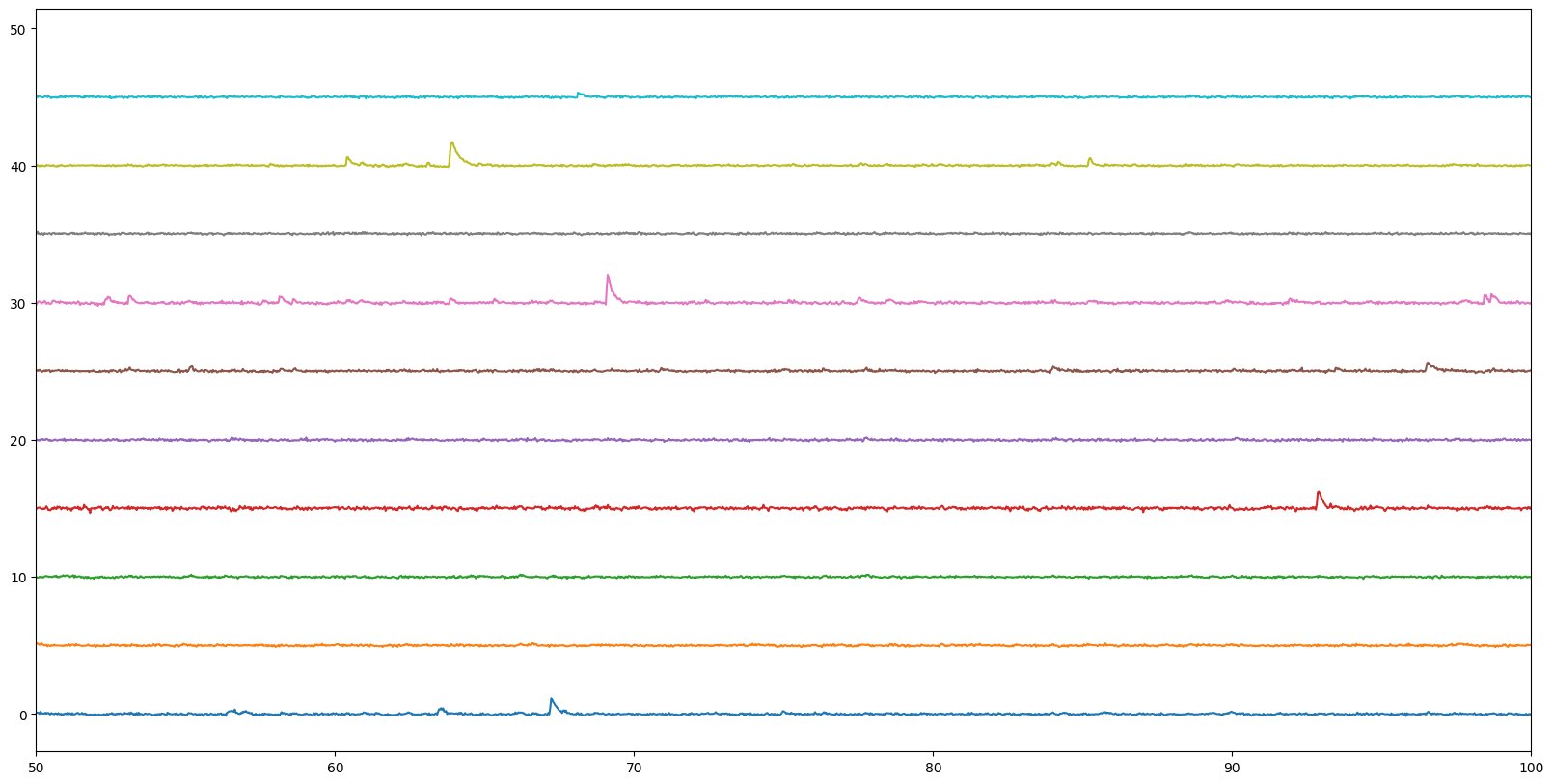

Step 5. Look at the calcium transients of your cells#

Now we’ll plot the data of each of our cells (from the field of view above) across time. Each line shows the change in fluorescence over baseline (\(\Delta\))F/F) of the individual cells. When there are sharp increases, that’s when the cells are responding.

Create a for loop that will plot, in succession, the responses of the first ten cells of your dataset, contained in dff. Use ‘ts’ (the timestamps of the data) for the x axis.

Add informative labels to your plot. What is the x axis? What’s the y axis?

Use

plt.xlim()to zoom in on an interesting part of the data.Inspect the data here – how fast are these changes in fluorescence happening?

Challenge: How could you write this loop so that your traces wouldn’t be overlapping?

# Use the `get_dff_traces()` method to return both timestamps (ts, in seconds) as well as the deltaF/F (dff)

ts, dff = data.get_dff_traces()

# Set up a figure

fig = plt.figure(figsize=(20,10))

offset = 0

# Your plotting script below

for neuron in range(10):

plt.plot(ts,dff[neuron]+offset)

plt.xlim([50,100])

offset = offset + 5

plt.show()

Step 6. Look at the response of your cells to natural scenes#

Hmm, there are some responses above, but it’s tough to see what’s going on with just the raw traces. Let’s instead see how these cells actually responded to different types of images. To do so, we’ll need to use the get_cell_specimens() method on our boc, giving it the name of the experiment container ID to look for. The dataframe that this creates will have a lot more information about what the cells in our experiment prefer.

# Get the cell specimens information for this session

cell_specimens = boc.get_cell_specimens(experiment_container_ids=[experiment_container_id])

cell_specimens_df = pd.DataFrame(cell_specimens)

cell_specimens_df.head()

---------------------------------------------------------------------------

KeyboardInterrupt Traceback (most recent call last)

Cell In[11], line 2

1 # Get the cell specimens information for this session

----> 2 cell_specimens = boc.get_cell_specimens(experiment_container_ids=[experiment_container_id])

3 cell_specimens_df = pd.DataFrame(cell_specimens)

4 cell_specimens_df.head()

File /opt/miniconda3/envs/jb_py311/lib/python3.11/site-packages/allensdk/core/brain_observatory_cache.py:489, in BrainObservatoryCache.get_cell_specimens(self, file_name, ids, experiment_container_ids, include_failed, simple, filters)

439 """Return cell specimens that have certain properies.

440

441 Parameters

(...) 484 list of dictionaries

485 """

487 file_name = self.get_cache_path(file_name, self.CELL_SPECIMENS_KEY)

--> 489 cell_specimens = self.api.get_cell_metrics(

490 path=file_name,

491 strategy="lazy",

492 pre=lambda x: [y for y in x],

493 **Cache.cache_json(),

494 )

496 cell_specimens = self.api.filter_cell_specimens(

497 cell_specimens,

498 ids=ids,

(...) 501 filters=filters,

502 )

504 # drop the thumbnail columns

File /opt/miniconda3/envs/jb_py311/lib/python3.11/site-packages/allensdk/api/warehouse_cache/cache.py:661, in cacheable.<locals>.decor.<locals>.w(*args, **kwargs)

658 if decor.post and not 'post in kwargs':

659 kwargs['post'] = decor.post

--> 661 result = Cache.cacher(func,

662 *args,

663 **kwargs)

664 return result

File /opt/miniconda3/envs/jb_py311/lib/python3.11/site-packages/allensdk/api/warehouse_cache/cache.py:384, in Cache.cacher(fn, *args, **kwargs)

382 if writer:

383 data = fn(*args, **kwargs)

--> 384 data = pre(data)

385 writer(path, data)

386 else:

File /opt/miniconda3/envs/jb_py311/lib/python3.11/site-packages/allensdk/core/brain_observatory_cache.py:492, in BrainObservatoryCache.get_cell_specimens.<locals>.<lambda>(x)

439 """Return cell specimens that have certain properies.

440

441 Parameters

(...) 484 list of dictionaries

485 """

487 file_name = self.get_cache_path(file_name, self.CELL_SPECIMENS_KEY)

489 cell_specimens = self.api.get_cell_metrics(

490 path=file_name,

491 strategy="lazy",

--> 492 pre=lambda x: [y for y in x],

493 **Cache.cache_json(),

494 )

496 cell_specimens = self.api.filter_cell_specimens(

497 cell_specimens,

498 ids=ids,

(...) 501 filters=filters,

502 )

504 # drop the thumbnail columns

File /opt/miniconda3/envs/jb_py311/lib/python3.11/site-packages/allensdk/core/brain_observatory_cache.py:492, in <listcomp>(.0)

439 """Return cell specimens that have certain properies.

440

441 Parameters

(...) 484 list of dictionaries

485 """

487 file_name = self.get_cache_path(file_name, self.CELL_SPECIMENS_KEY)

489 cell_specimens = self.api.get_cell_metrics(

490 path=file_name,

491 strategy="lazy",

--> 492 pre=lambda x: [y for y in x],

493 **Cache.cache_json(),

494 )

496 cell_specimens = self.api.filter_cell_specimens(

497 cell_specimens,

498 ids=ids,

(...) 501 filters=filters,

502 )

504 # drop the thumbnail columns

File /opt/miniconda3/envs/jb_py311/lib/python3.11/site-packages/allensdk/api/queries/rma_pager.py:58, in RmaPager.pager(fn, *args, **kwargs)

56 while result_count == num_rows:

57 kwargs['start_row'] = start_row

---> 58 data = fn(*args, **kwargs)

60 start_row = start_row + num_rows

61 result_count = len(data)

File /opt/miniconda3/envs/jb_py311/lib/python3.11/site-packages/allensdk/api/queries/brain_observatory_api.py:359, in BrainObservatoryApi.get_cell_metrics(self, cell_specimen_ids, *args, **kwargs)

345 """Get cell metrics by id

346

347 Parameters

(...) 354 dict : cell metric metadata

355 """

357 order = kwargs.pop("order", ["'cell_specimen_id'"])

--> 359 data = self.template_query(

360 "brain_observatory_queries",

361 "cell_metric",

362 cell_specimen_ids=cell_specimen_ids,

363 order=order,

364 *args,

365 **kwargs,

366 )

368 return data

File /opt/miniconda3/envs/jb_py311/lib/python3.11/site-packages/allensdk/api/queries/rma_template.py:132, in RmaTemplate.template_query(self, template_name, entry_name, **kwargs)

128 query_args['order'] = template['order']

130 query_args.update(kwargs)

--> 132 data = self.model_query(**query_args)

134 return data

File /opt/miniconda3/envs/jb_py311/lib/python3.11/site-packages/allensdk/api/queries/rma_api.py:257, in RmaApi.model_query(self, *args, **kwargs)

217 def model_query(self, *args, **kwargs):

218 '''Construct and execute a model stage of an RMA query string.

219

220 Parameters

(...) 255 response, including the normalized query.

256 '''

--> 257 return self.json_msg_query(

258 self.build_query_url(

259 self.model_stage(*args, **kwargs)))

File /opt/miniconda3/envs/jb_py311/lib/python3.11/site-packages/allensdk/api/api.py:164, in Api.json_msg_query(self, url, dataframe)

147 def json_msg_query(self, url, dataframe=False):

148 ''' Common case where the url is fully constructed

149 and the response data is stored in the 'msg' field.

150

(...) 161 returned data; type depends on dataframe option

162 '''

--> 164 data = self.do_query(lambda *a, **k: url,

165 self.read_data)

167 if dataframe is True:

168 warnings.warn("dataframe argument is deprecated", DeprecationWarning)

File /opt/miniconda3/envs/jb_py311/lib/python3.11/site-packages/allensdk/api/api.py:204, in Api.do_query(self, url_builder_fn, json_traversal_fn, *args, **kwargs)

200 api_url = url_builder_fn(*args, **kwargs)

202 post = kwargs.get('post', False)

--> 204 json_parsed_data = self.retrieve_parsed_json_over_http(api_url, post)

206 return json_traversal_fn(json_parsed_data)

File /opt/miniconda3/envs/jb_py311/lib/python3.11/site-packages/allensdk/api/api.py:369, in Api.retrieve_parsed_json_over_http(self, url, post)

366 self._log.info("Downloading URL: %s", url)

368 if post is False:

--> 369 data = json_utilities.read_url_get(

370 requests.utils.quote(url,

371 ';/?:@&=+$,'))

372 else:

373 data = json_utilities.read_url_post(url)

File /opt/miniconda3/envs/jb_py311/lib/python3.11/site-packages/allensdk/core/json_utilities.py:115, in read_url_get(url)

98 def read_url_get(url):

99 """Transform a JSON contained in a file into an equivalent

100 nested python dict.

101

(...) 113 the output will be of the corresponding type.

114 """

--> 115 response = urllib_request.urlopen(url)

116 json_string = response.read().decode("utf-8")

118 return json.loads(json_string)

File /opt/miniconda3/envs/jb_py311/lib/python3.11/urllib/request.py:216, in urlopen(url, data, timeout, cafile, capath, cadefault, context)

214 else:

215 opener = _opener

--> 216 return opener.open(url, data, timeout)

File /opt/miniconda3/envs/jb_py311/lib/python3.11/urllib/request.py:519, in OpenerDirector.open(self, fullurl, data, timeout)

516 req = meth(req)

518 sys.audit('urllib.Request', req.full_url, req.data, req.headers, req.get_method())

--> 519 response = self._open(req, data)

521 # post-process response

522 meth_name = protocol+"_response"

File /opt/miniconda3/envs/jb_py311/lib/python3.11/urllib/request.py:536, in OpenerDirector._open(self, req, data)

533 return result

535 protocol = req.type

--> 536 result = self._call_chain(self.handle_open, protocol, protocol +

537 '_open', req)

538 if result:

539 return result

File /opt/miniconda3/envs/jb_py311/lib/python3.11/urllib/request.py:496, in OpenerDirector._call_chain(self, chain, kind, meth_name, *args)

494 for handler in handlers:

495 func = getattr(handler, meth_name)

--> 496 result = func(*args)

497 if result is not None:

498 return result

File /opt/miniconda3/envs/jb_py311/lib/python3.11/urllib/request.py:1377, in HTTPHandler.http_open(self, req)

1376 def http_open(self, req):

-> 1377 return self.do_open(http.client.HTTPConnection, req)

File /opt/miniconda3/envs/jb_py311/lib/python3.11/urllib/request.py:1352, in AbstractHTTPHandler.do_open(self, http_class, req, **http_conn_args)

1350 except OSError as err: # timeout error

1351 raise URLError(err)

-> 1352 r = h.getresponse()

1353 except:

1354 h.close()

File /opt/miniconda3/envs/jb_py311/lib/python3.11/http/client.py:1395, in HTTPConnection.getresponse(self)

1393 try:

1394 try:

-> 1395 response.begin()

1396 except ConnectionError:

1397 self.close()

File /opt/miniconda3/envs/jb_py311/lib/python3.11/http/client.py:325, in HTTPResponse.begin(self)

323 # read until we get a non-100 response

324 while True:

--> 325 version, status, reason = self._read_status()

326 if status != CONTINUE:

327 break

File /opt/miniconda3/envs/jb_py311/lib/python3.11/http/client.py:286, in HTTPResponse._read_status(self)

285 def _read_status(self):

--> 286 line = str(self.fp.readline(_MAXLINE + 1), "iso-8859-1")

287 if len(line) > _MAXLINE:

288 raise LineTooLong("status line")

File /opt/miniconda3/envs/jb_py311/lib/python3.11/socket.py:718, in SocketIO.readinto(self, b)

716 while True:

717 try:

--> 718 return self._sock.recv_into(b)

719 except timeout:

720 self._timeout_occurred = True

KeyboardInterrupt:

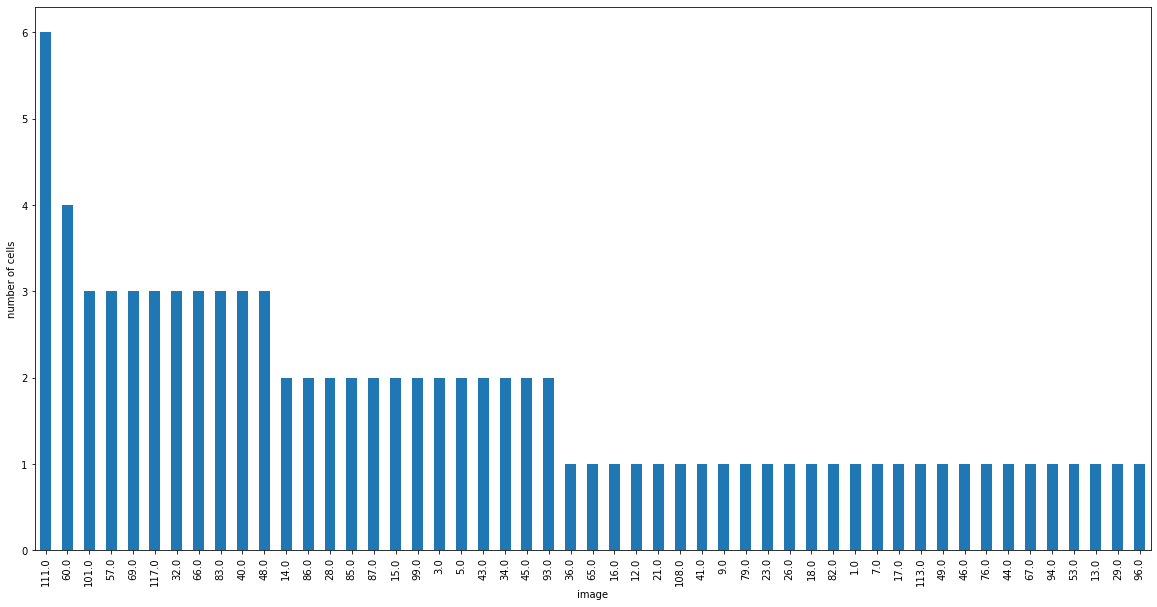

Let’s create a histogram of preferred images in our dataset.

Create a subset of this dataframe called ‘sig_cells’ where the column ‘p_ns’ is less than 0.05. In other words, we only want cells that significantly preferred a specific image.

The preferred image ID is in the ‘pref_image_ns’ column of this dataframe. Assign this to ‘pref_images’.

Create a histogram of preferred images with 118 bins (there are 118 different images). There are multiple ways to do this!

sig_cells = cell_specimens_df[cell_specimens_df['p_ns']<0.05]

pref_images = sig_cells['pref_image_ns']

fig = plt.figure(figsize=(20,10))

pref_images.value_counts().plot(kind='bar')

plt.xlabel('image')

plt.ylabel('number of cells')

plt.show()

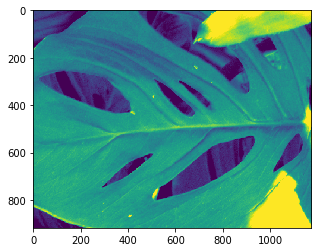

Wait, but what is that image? In order to actually see what this stimulus is, first, we’ll organize the stimulus table. This tells us which stimulus was played on each trial. This data set has 118 different scenes, and each scene is presented 50 times. Images of the scenes can be found here.

Assign your top image to

image_id. You can do this programmatically, or simply hard code it.The ‘natural_scene_template’ that we create below is a numpy array, where the first dimension is the image id, and the second and third are the values of the image. You want to plot

natural_scene_template[image_id,:,:]Use theimshow()method to show the image, just as we did with our maximum projection above.

natural_scene_table = data.get_stimulus_table('natural_scenes')

natural_scene_template = data.get_stimulus_template('natural_scenes')

sceneIDs = np.unique(natural_scene_table.frame)

# Choose your image id

image_id = 111

plt.imshow(natural_scene_template[image_id,:,:])

plt.show()

# Plot this natural scene

Step 7. Examine the direction selectivity of your cell#

Sometimes, the function of a cell is not particularly clear from natural stimuli. Those stimuli have a lot of information in them, and it might be hard to tell what a cell is actually responding to. Instead, we can use simple drifting gratings to look at one straightforward property of a cell: does it respond to specific directions of movement?

We can use the columns that look at the direction selectivity index (DSI) in order to determine whether our cells are direction selective (typically considered having a DSI > 0.5). Take another look at the cell_specimens_df we created above. How would you analyze the data here to see if your cells were direction selective? How would you go back and compare these data to that of other cell types?